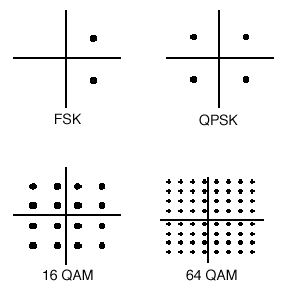

The book covers the theory of probabilistic information measures and their application to coding theorems for information sources and noisy channels. This is an up-to-date treatment of traditional information theory emphasizing ergodic theory. It is a self-contained introduction to all the basic results in the theory of information and coding. This volume can be used either for self-study or for a university-level course.

Course schedule excerpt (columns: Date, Topics Covered, Assignment, Solutions, Remarks): Sep 17, public holiday; Sep 18, Introduction to Source Coding: nonsingular codes, uniquely decodable codes.

MIT OpenCourseWare is a free, open publication of material from thousands of MIT courses, covering the entire MIT curriculum.

This fundamental monograph introduces both the probabilistic and algebraic aspects of information theory and coding. It has evolved from the authors' years of experience teaching at the undergraduate level, including several Cambridge Maths Tripos courses.

Information Theory and Coding. 1. The capacity of a band-limited additive white Gaussian noise (AWGN) channel is given by $C = W \log_2(1 + S/N)$ bits per second (bps), where $W$ is the channel bandwidth and $S/N$ is the signal-to-noise power ratio.

Summary: the mathematical principles of communication that govern the compression and transmission of data, and the design of efficient methods of doing so.

Coding theory is the study of how to encode information (or behaviour, thought, etc.). It also deals with methods of removing noise picked up in the environment, so that the original message can be received clearly.

6th Sem, Information Theory and Coding (06EC65), Dept. of ECE, SJBIT, Blore. Unit 1: Information Theory. 1.1 Introduction: Communication. Communication explicitly involves the transmission of information from one point to another.
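The band-limited AWGN capacity, $C = W \log_2(1 + S/N)$ bits per second (the Shannon-Hartley theorem), is easy to evaluate numerically. The Python sketch below is illustrative only: the helper names `awgn_capacity` and `db_to_linear` are hypothetical, and the 3 kHz bandwidth and 30 dB SNR are assumed example values, not figures from the text.

```python
import math


def awgn_capacity(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley capacity C = W log2(1 + S/N), in bits per second.

    snr_linear is the signal-to-noise power ratio S/N as a plain ratio (not dB).
    """
    if bandwidth_hz <= 0 or snr_linear < 0:
        raise ValueError("bandwidth must be positive and SNR non-negative")
    return bandwidth_hz * math.log2(1.0 + snr_linear)


def db_to_linear(snr_db: float) -> float:
    """Convert an SNR given in decibels to a linear power ratio."""
    return 10.0 ** (snr_db / 10.0)


if __name__ == "__main__":
    # Assumed example: a 3 kHz channel at 30 dB SNR (S/N = 1000).
    c = awgn_capacity(3000.0, db_to_linear(30.0))
    print(f"{c:.0f} bit/s")
```

Run as a script, it prints `29902 bit/s`, i.e. roughly 30 kbit/s for this channel. The SNR must be converted from decibels to a linear ratio before it enters the logarithm; passing a dB value directly is a common mistake.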
Information Theory and Coding, Lecture 1 (Pavan Nuggehalli). Probability review: origin in gambling; Laplace, combinatorial counting; circular discrete geometric probability; continuum; A. N. Kolmogorov, 1933, Berlin. Notion of an experiment: let … be the set of all …

A Student's Guide to Coding and Information Theory: this textbook is intended as an easy-to-read introduction to coding and information theory for freshman-level students or non-engineering majors.

Since then, information theory has guided the design of devices that reach or approach these limits. Here are two examples that illustrate results obtained with information-theoretic methods. Thanks to coding techniques (run-length coding, Huffman coding), the transmission time …

With information theory as the foundation, Part II is a comprehensive treatment of network coding theory, with detailed discussions of linear network codes and convolutional network codes.

This book offers a comprehensive overview of information theory and error-control coding, using an approach different from that of the existing literature.

Information Theory and Coding (video lectures): L1 Introduction to Information Theory and Coding; L2 Definition of Information Measure and Entropy; L3 Extension of …

Information Theory, from The Chinese University of Hong Kong: the lectures of this course are based on the first 11 chapters of Prof. Raymond Yeung's textbook Information Theory and Network Coding (Springer, 2008).

Information Theory and Coding (ECE), by Nitin Mittal, Head of Department, Electronics and Communication Engineering, Modern Institute of Engineering & Technology, Mohri, Kurukshetra. Bharat Publications, 135A, Santpura Road, Yamuna Nagar.
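Huffman coding, named above as one of the techniques that shorten transmission time, can be sketched in a few lines of Python. This is the standard greedy construction (repeatedly merge the two least probable nodes), not code from any of the cited books; `huffman_code` and `average_length` are hypothetical helper names, and the four-symbol source is an assumed example.

```python
import heapq
from itertools import count


def huffman_code(probs: dict[str, float]) -> dict[str, str]:
    """Build a binary Huffman code by repeatedly merging the two least probable nodes."""
    if len(probs) == 1:
        # A lone symbol still needs a one-bit codeword.
        return {next(iter(probs)): "0"}
    tie = count()  # tie-breaker so heapq never has to compare the dicts
    heap = [(p, next(tie), {sym: ""}) for sym, p in probs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        p0, _, left = heapq.heappop(heap)
        p1, _, right = heapq.heappop(heap)
        # Prefix '0' to every codeword in one subtree and '1' in the other.
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (p0 + p1, next(tie), merged))
    return heap[0][2]


def average_length(probs: dict[str, float], code: dict[str, str]) -> float:
    """Expected codeword length in bits per symbol."""
    return sum(p * len(code[s]) for s, p in probs.items())


if __name__ == "__main__":
    probs = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
    code = huffman_code(probs)
    print(code, average_length(probs, code))
```

For this dyadic source the result is `{'a': '0', 'b': '10', 'c': '110', 'd': '111'}` with an average length of 1.75 bits/symbol, which equals the source entropy. For non-dyadic probabilities the Huffman average length exceeds the entropy, but by less than one bit; different tie-breaking can produce different, equally optimal codes.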
Introduction to Information Theory. This chapter introduces some of the basic concepts of information theory, as well as the definitions and notations of probabilities that will be used throughout the book. The notion of entropy, which is fundamental to the whole topic of …

Information Theory and Coding (J. G. Daugman). Prerequisite courses: Probability; Mathematical Methods for CS; Discrete Mathematics. Aims: the aims of this course are to introduce the principles and applications of information theory.

Information Coding Theory, by Waheeduddin Hyder. Topics: introduction to information coding theory, the discrete memoryless source (DMS), and the binary symmetric channel (BSC).

These are the notes for Part B of the Information Theory and Coding subject for 5th-semester EC students under VTU.

Information theory, coding and cryptography are the three load-bearing pillars of any digital communication system. In this introductory course, we will start with the basics of information theory and source coding.

Information Theory, Excess Entropy and Statistical Complexity, by David Feldman (College of the Atlantic): this e-book is a brief tutorial on information theory, excess entropy and statistical complexity.

Information Theory: Coding Theorems for Discrete Memoryless Systems, by Imre Csiszár and János Körner (Dec 1, 1997). The Theory of Information and Coding: Student Edition (Encyclopedia of Mathematics and its Applications) (Aug 2, 2004).

Information Theory and Coding by Example: this fundamental monograph introduces both the probabilistic and the algebraic aspects of information theory and coding.

Prologue: this book is devoted to the theory of probabilistic information measures and their application to coding theorems for information sources and noisy channels.

Information is the source of a communication system, whether it is analog or digital.
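The average information content of a discrete memoryless source (DMS) is its entropy, $H(X) = -\sum_i p_i \log_2 p_i$ bits per symbol, where an outcome of probability $p_i$ carries self-information $I(x_i) = -\log_2 p_i$. A minimal Python sketch, assuming the source probabilities are supplied as a list; `self_information` and `entropy` are hypothetical helper names.

```python
import math


def self_information(p: float) -> float:
    """Information conveyed by an outcome of probability p, in bits: I = -log2 p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(p)


def entropy(probs: list[float]) -> float:
    """Entropy H(X) = sum of p * I(p) for a DMS, in bits per symbol.

    Zero-probability symbols are skipped, following the convention 0 log 0 = 0.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * self_information(p) for p in probs if p > 0)


if __name__ == "__main__":
    print(entropy([0.5, 0.5]))         # fair coin
    print(entropy([0.5, 0.25, 0.25]))  # three-symbol source
```

A fair coin gives 1.0 bit per toss, and the three-symbol source gives 1.5 bits per symbol: the most probable symbol carries 1 bit and each of the others carries 2 bits.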
Information theory is a mathematical approach to the study of coding of information, along with the quantification, storage, and communication of information. Information channels, like sources, are generally either discrete or continuous; a physical communication channel is, by its nature, continuous.

Information Theory and Coding, by Prof. Merchant, Department of Electrical Engineering, IIT Bombay (NPTEL).

Information Theory and Network Coding (Springer, January 31, 2008). Preface: this book evolved from my book A First Course in Information Theory, published in 2002, when network coding was still in its infancy. The last few …

An Introduction to Information Theory and Applications (F. Kohlas): information theory, in the technical sense in which it is used today, goes back to the work … information theory to coding, communication and other domains.

The book provides a comprehensive treatment of Information Theory and Coding as required for understanding and appreciating the basic concepts. It starts with the mathematical prerequisites and then covers the major topics chapter by chapter. … called the Joint Source-Channel Coding Theorem.

Information theory addresses the following questions: given a source, how much can I compress the data? Given a channel, how noisy can the channel be, or how much redundancy is necessary to minimize …

Past exam papers: Information Theory and Coding. Solution notes are available for many past questions; they were produced by the question setters, primarily for the benefit of the examiners.

Even though information theory is considered a branch of communication theory, it actually spans a wide range of disciplines, including computer science, probability, statistics, and economics.
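For a concrete answer to "how noisy can the channel be?", consider the binary symmetric channel (BSC) with crossover probability $p$. Its capacity is $C = 1 - H_b(p)$ bits per channel use, where $H_b(p) = -p\log_2 p - (1-p)\log_2(1-p)$ is the binary entropy function, and by the channel coding theorem reliable communication is possible at any rate below $C$. The Python sketch below uses hypothetical helper names `binary_entropy` and `bsc_capacity` and illustrative crossover values.

```python
import math


def binary_entropy(p: float) -> float:
    """H_b(p) = -p log2 p - (1-p) log2(1-p), with H_b(0) = H_b(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def bsc_capacity(crossover: float) -> float:
    """Capacity of a binary symmetric channel in bits per use: C = 1 - H_b(p)."""
    if not 0.0 <= crossover <= 1.0:
        raise ValueError("crossover probability must be in [0, 1]")
    return 1.0 - binary_entropy(crossover)


if __name__ == "__main__":
    for p in (0.0, 0.01, 0.1, 0.5):
        print(f"p={p}: C={bsc_capacity(p):.4f} bit/use")
```

Capacity falls from 1 bit/use at $p = 0$ to about 0.5310 at $p = 0.1$ and to 0 at $p = 0.5$, where the output is statistically independent of the input and no redundancy can help.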
Information Theory and Coding 10EC55, Part A. Unit 1: Information Theory. Syllabus: introduction, measure of information, average information content of symbols in long independent sequences, average information content of symbols in long dependent sequences.

The test carries questions on Information Theory and Source Coding, Channel Capacity and Channel Coding, Linear Block Codes, Cyclic Codes, BCH and RS Codes, Convolutional Codes, Coding and Modulation, etc. One mark is awarded for each correct answer, and 0.25 marks are deducted for each wrong answer.

441 offers an introduction to the quantitative theory of information and its applications to reliable, efficient communication systems. Topics include the mathematical definition and properties of information, the source coding theorem, lossless data compression, optimal lossless coding, noisy communication channels, the channel coding theorem, the source-channel separation theorem, and multiple access.

Information Theory and Channel Capacity: Measure of Information, Average Information Content of Symbols in Long Independent Sequences, Average Information Content of Symbols in Long Dependent Sequences, Markoff Statistical Model for Information Sources, Entropy and Information Rate of Markoff Sources, Encoding of the Source Output, Shannon's Encoding Algorithm, Communication Channels.

This course is concerned with the fundamental limits of communication. It guides students through various data compression techniques, and its coding theory part is concerned with practical techniques for realizing the limits specified by information theory.

A list of recommended reference books on Information Theory and Coding, used by students of top universities, institutes and colleges, includes Information Theory and Coding by N. Abramson. … and information geometry in general.
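Shannon's encoding algorithm, listed in the syllabus above, is usually presented as: sort the symbols by decreasing probability, form the cumulative probability $\alpha_i = \sum_{k<i} p_k$, choose $l_i$ as the smallest integer with $2^{l_i} \ge 1/p_i$, and take the first $l_i$ bits of the binary expansion of $\alpha_i$ as the codeword. The Python sketch below follows that textbook description under two assumptions: probabilities are passed as exact `Fraction` values so the binary expansion is not disturbed by rounding, and `shannon_code` is a hypothetical helper name.

```python
from fractions import Fraction


def shannon_code(probs: dict[str, Fraction]) -> dict[str, str]:
    """Shannon's encoding algorithm (sketch of the usual textbook procedure)."""
    if sum(probs.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    # Step 1: order symbols by decreasing probability.
    ordered = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    code: dict[str, str] = {}
    alpha = Fraction(0)  # cumulative probability of the symbols already coded
    for sym, p in ordered:
        # Step 2: smallest length l with 2**(-l) <= p, i.e. ceil(log2(1/p)).
        length = 0
        while Fraction(1, 2 ** length) > p:
            length += 1
        # Step 3: first `length` bits of the binary expansion of alpha.
        bits, frac = [], alpha
        for _ in range(length):
            frac *= 2  # doubling moves the next binary digit left of the point
            bit = int(frac >= 1)
            bits.append(str(bit))
            frac -= bit
        code[sym] = "".join(bits)
        alpha += p
    return code


if __name__ == "__main__":
    source = {"x": Fraction(2, 5), "y": Fraction(3, 10), "z": Fraction(1, 5), "w": Fraction(1, 10)}
    code = shannon_code(source)
    avg = sum(p * len(code[s]) for s, p in source.items())
    print(code, float(avg))
```

For the assumed source x: 2/5, y: 3/10, z: 1/5, w: 1/10 it yields the prefix-free code x: 00, y: 01, z: 101, w: 1110, with an average length of 2.4 bits/symbol. Unlike Huffman coding, Shannon's algorithm is not optimal in general, but its lengths guarantee $H(X) \le \bar{L} < H(X) + 1$.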
… data compression to the entropy limit (source coding), various source coding algorithms, and differential entropy, … including computing the capacity of a channel.

Information Theory and the Digital Age (Aftab, Cheung, Kim, Thakkar, Yeddanapudi): what made possible, what induced the development of coding as a theory, and the development of very complicated codes, was Shannon's Theorem: he told …
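Computing the capacity of a general discrete memoryless channel, mentioned in the syllabus fragment above, usually has no closed form. A standard numerical method (not named in the source) is the Blahut-Arimoto algorithm, which alternates between computing the output distribution and multiplicatively updating the input distribution. The Python sketch below assumes the channel is given as a transition matrix `W[x][y]` = P(y | x) with rows summing to 1; `blahut_arimoto` is a hypothetical function name and the tolerance is an arbitrary choice.

```python
import math


def blahut_arimoto(W: list[list[float]], tol: float = 1e-9, max_iter: int = 10_000) -> float:
    """Estimate the capacity (bits per use) of a discrete memoryless channel.

    W[x][y] is the transition probability P(y | x); each row must sum to 1.
    """
    if max_iter < 1:
        raise ValueError("max_iter must be at least 1")
    nx, ny = len(W), len(W[0])
    p = [1.0 / nx] * nx  # start from the uniform input distribution
    for _ in range(max_iter):
        # Output distribution induced by the current input distribution.
        q = [sum(p[x] * W[x][y] for x in range(nx)) for y in range(ny)]
        # D[x] = relative entropy D(W(.|x) || q) in bits; 0 log 0 terms are skipped.
        D = [
            sum(W[x][y] * math.log2(W[x][y] / q[y]) for y in range(ny) if W[x][y] > 0)
            for x in range(nx)
        ]
        lower = sum(p[x] * D[x] for x in range(nx))  # mutual information I(X;Y)
        upper = max(D)                               # upper bound on capacity
        if upper - lower < tol:
            break
        # Multiplicative update: p(x) proportional to p(x) * 2**D[x].
        weights = [p[x] * 2.0 ** D[x] for x in range(nx)]
        total = sum(weights)
        p = [w / total for w in weights]
    return lower


if __name__ == "__main__":
    bsc = [[0.9, 0.1], [0.1, 0.9]]
    z_channel = [[1.0, 0.0], [0.5, 0.5]]
    print(f"BSC(0.1): {blahut_arimoto(bsc):.4f} bit/use")
    print(f"Z channel: {blahut_arimoto(z_channel):.4f} bit/use")
```

The stopping rule relies on the standard bounds $\sum_x p(x) D_x \le C \le \max_x D_x$, where $D_x$ is the relative entropy between row $x$ of the channel matrix and the current output distribution. For the BSC with crossover 0.1 the estimate matches $1 - H_b(0.1) \approx 0.5310$, and for the Z channel above it converges to $\log_2 1.25 \approx 0.3219$.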